The Perceptron Learning Algorithm (PLA) is one of the simplest Machine Learning (ML) algorithms. This post goes through an elementary example to illustrate how PLA works.

Notes on Perceptron Learning Algorithm

We start with some definitions and classification problem outline, next we move to the plain vanilla perceptron learning algorithm.

Linear Classifier

Consider set of points with first coordinate fixed to 1 and mapping

where

, which maps elements of

to two different classes denoted by elements of

. The two classes are assumed to be linearly separable, i.e. there exists vector

such that

where * is dot product on

. For the sake of the following discussion we will define

. Such mapping

is a linear classifier (on the set

). The set of the pairs

:

is called the training data set.

Perceptron Learning Algorithm (PLA)

Given a training data set , the goal of PLA is to find some

that provides correct classification on

, i.e. for all pairs in the training set holds

. Obviously, for a linearly separable training data sets such classifier is not unique.

The algorithm is iterative. On each iteration we will obtain new candidate value for , which we will denote as

and the corresponding linear mapping will be denoted as

.

Initialization. Assign .

Step .

- Compute values of

pairs on the training set until we find a misclassified pair, say, for

,

.

- Update the candidate using the rule:

.

Termination. Stop iterations when all points are correctly classified.

The intuition behind the update method (item 2) is that it adjusts to improve its performance on the selected misclassified point:

and since always , due to the first non-zero coordinate, the value of

is closer to having the same sign as

than

. Since each step improves only on a single training pair (and may actually make classification performance worse on some or all other pairs), it is not obvious why the PLA converges to a solution of the linear classification problem. The proof will be discussed in one of the future posts. Here we will use an extremely simple examples to illustrate the PLA.

Example: One-dimensional Training Data Set.

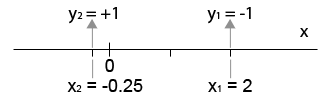

The training data set consists of two training points:

;

also shown below:

While this is probably the simplest example that can be devised to illustrate the PLA, it shows the idea behind it in a clear unobstructed way. By executing the PLA step-by-step we will first initialize .

Iteration 1. We obtain:

;

.

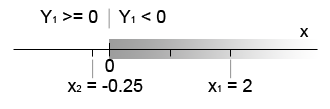

Note that classifies the entire range of real values of

as +1. Out of the two training data points only the first one

is misclassified: the desired value is -1 while

returns +1. This results in an update:

Iteration 2. With the new candidate we obtain the following values on the data points:

;

.

As a side note, the decision boundary between the two classes is now located at (while for

it was at -∞):

The value of decision boundary is obtained by solving equation:

or, equivalently,

.

The misclassified point leads to an update:

That moves decision boundary to the root of the equation i.e. to

and results in all training data points classified correctly:

Figure 3. Second iteration moves decision boundary to x=0. Both training data points are classified correctly.

References

The PLA is covered by many books and articles. The AML Book listed below is easily accessible, well written and has a corresponding MOOC.

[1] Yaser S. Abu-Mostafa, Malik Magdon-Ismail, Hsuan-Tien Lin. “Learning From Data” AMLBook, 213 pages, 2012.